Table of Contents

Introduction: Machine Learning in AI – How Artificial Intelligence Learns From Experience

In 1997, Deep Blue defeated world chess champion Garry Kasparov using brute force computation and millions of pre-programmed rules. The machine could not learn from its mistakes or improve beyond what engineers built into it. Twenty years later, AlphaGo defeated the world champion Go player using a different approach entirely. It learned by playing millions of games against itself, discovering strategies no human had ever conceived. The difference between these two systems reveals something fundamental about modern artificial intelligence.

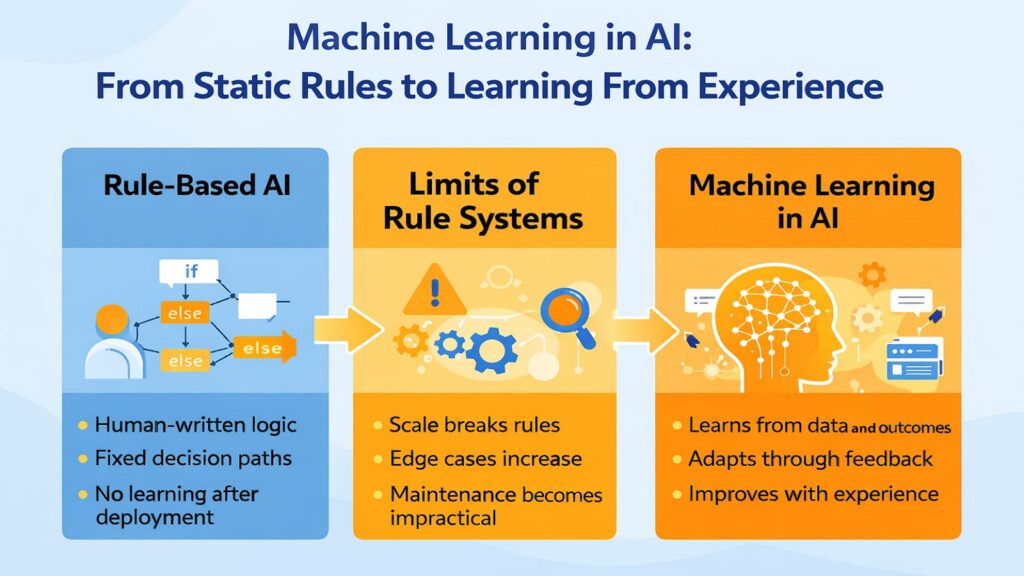

Machine Learning in AI represents the shift from static instruction to dynamic adaptation. Early AI systems functioned like elaborate flowcharts, following predetermined paths that human programmers had carefully mapped. Every possibility had to be anticipated and coded in advance. Modern AI systems learn from experience instead. They adjust their behavior based on outcomes, refine their understanding through exposure to new data, and improve their performance without being explicitly reprogrammed for each new situation.

This article explores six practical ways that Machine Learning in AI enables systems to evolve through experience rather than explicit programming. Each section examines a different learning mechanism, supported by real-world examples that show how artificial intelligence adapts to complex environments. From feedback loops that correct errors to probabilistic reasoning under uncertainty, these learning approaches collectively explain why modern AI continues to expand its capabilities over time.

Core Sub-Systems of Machine Learning in AI

| Sub-Systems | Function |

|---|---|

| Supervised Learning | Learning from labeled examples where correct outputs are provided during training |

| Unsupervised Learning | Discovering patterns and structures in data without explicit labels or guidance |

| Reinforcement Learning | Learning through trial and error by receiving rewards or penalties for actions |

| Neural Networks | Interconnected layers of computational units that process information hierarchically |

| Feature Engineering | Identifying and transforming relevant data attributes that improve learning outcomes |

| Model Optimization | Adjusting internal parameters to minimize prediction errors across training data |

| Validation Framework | Testing model performance on unseen data to ensure generalization capability |

| Inference Engine | Applying learned patterns to make predictions or decisions on new inputs |

1. Machine Learning in AI as the Learning Engine Behind Adaptation

Traditional software operates within fixed boundaries. An inventory management system operates under the same principles, regardless of whether it handles ten transactions or ten million. It cannot notice seasonal patterns, adapt to supplier delays, or learn from forecasting errors. The system performs exactly as programmed until a developer manually updates the code. This rigidity made early AI systems fragile when facing unexpected situations or changing conditions.

Machine Learning in AI fundamentally changes this limitation by enabling systems to modify their behavior through exposure to data. The system observes inputs, generates outputs, measures the accuracy of those outputs, and adjusts its internal logic to improve future performance. This cycle continues automatically without human intervention. A medical imaging system improves its diagnostic accuracy as it analyzes more scans. A voice assistant becomes better at understanding regional accents through millions of user interactions. The learning happens during operation, not just during initial development.

Early chatbots demonstrate this contrast clearly. ELIZA, created at MIT in the 1960s, used pattern matching and substitution rules to simulate conversation. It could recognize keywords like “mother” or “sad” and respond with pre-written phrases. The responses felt mechanical because the system could not learn from interactions. It gave identical answers to similar questions regardless of context or previous exchanges. Modern conversational AI, like GPT-based assistants learn from vast corpora of human dialogue. They adapt responses based on conversation flow, recognize nuanced language patterns, and improve through continued training on diverse interactions.

The adaptation capability extends beyond language. Streaming platforms adjust recommendations based on viewing patterns. Autonomous vehicles refine their decision-making after processing millions of miles of driving data. Financial trading systems modify their strategies as market conditions evolve. In each case, the system becomes more capable over time without requiring programmers to anticipate every scenario and write explicit rules for handling it.

Evolution of Adaptation in Machine Learning in AI

| Aspect | Description |

|---|---|

| Pre-Deployment Learning | Models trained on historical data before being deployed to production environments |

| Continuous Learning | Systems that update their knowledge incrementally as new data becomes available |

| Transfer Learning | Applying knowledge gained from one domain to improve performance in related areas |

| Online Learning | Real-time model updates that occur during operation rather than batch retraining |

| Meta-Learning | Learning how to learn by optimizing the learning process itself across multiple tasks |

| Adaptive Algorithms | Self-adjusting mechanisms that change learning rates or strategies based on performance |

2. Machine Learning in AI and the Shift From Rules to Experience

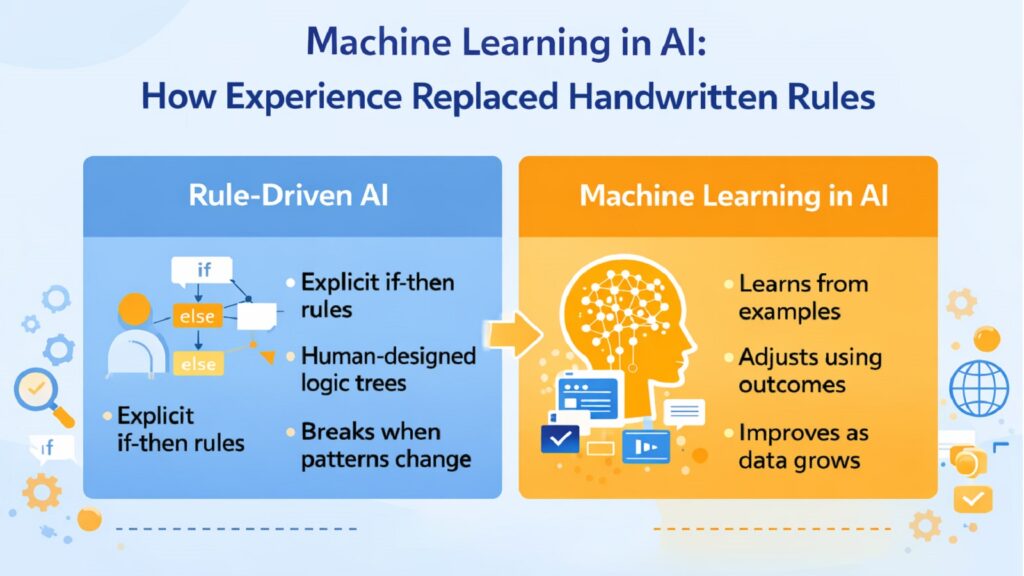

Traditional AI development required domain experts to articulate their knowledge as formal rules. Building a loan approval system meant interviewing underwriters, documenting their decision criteria, and translating that expertise into conditional logic. If the credit score exceeds 700 and debt-to-income ratio stays below 40 percent, and the employment history spans at least two years, then approve the application. Engineers spent months capturing these rules, yet the system still struggled with edge cases that fell outside documented patterns.

Machine Learning in AI inverts this approach by learning directly from examples and outcomes. Instead of writing rules, developers provide the system with thousands of historical loan applications along with their approval or rejection decisions. The learning algorithm identifies patterns that distinguish approved applications from rejected ones. It discovers which combinations of factors predict successful loan repayment without anyone explicitly programming those relationships. The knowledge emerges from data rather than being imposed through manual rule specification.

Email spam filtering illustrates this shift powerfully. Early spam filters used keyword lists and simple heuristics. Messages containing “free money” or “click here now” got flagged as spam. Spammers quickly adapted by misspelling words, using images instead of text, or employing more subtle language. Filter developers played constant catch-up, updating rules to match new spam tactics. This arms race favored attackers because they only needed to find one loophole while defenders needed comprehensive coverage.

Modern spam filters learn from millions of emails that users have marked as spam or legitimate. The system identifies subtle statistical patterns that distinguish unwanted messages from genuine correspondence. It recognizes that legitimate emails from financial institutions have certain structural characteristics, legitimate marketing follows specific unsubscribe protocols, and phishing attempts exhibit particular linguistic patterns. These distinctions emerge from analyzing examples rather than from someone writing rules about what constitutes spam. When spammers change tactics, the filter adapts by learning from newly marked examples.

Search engines underwent a similar transformation. Early systems ranked pages by counting keyword matches and analyzing link structures according to fixed formulas. Results improved when engineers tweaked ranking algorithms, but each adjustment required technical expertise and testing. Modern search systems learn ranking from billions of user interactions. They observe which results people click, how long they stay on clicked pages, and whether they refine their search afterward. These behavioral signals train the ranking algorithm to surface more relevant results without anyone specifying rules about what makes a webpage relevant.

Transition Mechanisms in Machine Learning in AI

| Elements of Machine Learning in AI | Implementation |

|---|---|

| Training Data Collection | Gathering representative examples that capture the range of situations the system will encounter |

| Pattern Recognition | Identifying statistical regularities and correlations within training examples automatically |

| Decision Boundary Learning | Determining thresholds and criteria that separate different outcomes based on input features |

| Generalization Capability | Extending learned patterns to handle new situations not present in original training data |

| Exception Handling | Managing outliers and unusual cases through probabilistic reasoning rather than explicit rules |

| Performance Monitoring | Tracking prediction accuracy and model behavior to identify when retraining becomes necessary |

3. Machine Learning in AI Through Feedback Loops and Error Correction

Feedback serves as the primary learning signal in Machine Learning in AI systems. The system makes a prediction, observes the actual outcome, measures the difference between prediction and reality, and adjusts its internal logic to reduce future errors. This cycle repeats across thousands or millions of examples, gradually refining the system’s behavior. Without feedback, learning cannot occur because the system has no way to distinguish better predictions from worse ones.

Netflix’s recommendation engine demonstrates feedback-driven learning at a massive scale. When users watch a recommended show, the system receives positive feedback. When they browse recommendations but watch something else instead, that signals the suggestions missed the mark. When they stop watching after a few minutes, that indicates poor matching between the recommendation and the preference. The system processes millions of these feedback signals daily, learning which content attributes predict viewer satisfaction for different user segments.

The learning happens through mathematical optimization. The recommendation model contains millions of parameters that influence which content gets suggested. Each piece of feedback slightly adjusts these parameters in directions that would have produced better recommendations. A user who watched and enjoyed a recommended documentary causes small adjustments that make the system slightly more likely to recommend similar documentaries in the future. A user who ignores thriller recommendations causes adjustments that decrease thriller suggestions for that profile. These tiny adjustments accumulate across billions of interactions, steadily improving recommendation accuracy.

Speech recognition systems improve through similar feedback mechanisms. Early systems struggled with accents, background noise, and unconventional phrasing. Modern systems achieve high accuracy because they learn from corrections. When voice assistants misunderstand commands and users rephrase or correct them, those corrections become training data. The system learns that “play songs by the beetles” likely means “play songs by The Beatles” despite the spelling difference. It learns that certain acoustic patterns in noisy environments correspond to specific words. Each correction refines the model’s understanding of speech variability.

Medical imaging support systems learn from radiologist feedback. An AI system analyzing chest X-rays might flag a region as potentially concerning. A radiologist reviews the image and either confirms the finding or determines it represents normal variation. This expert feedback trains the system to distinguish subtle abnormalities from benign patterns. According to research published by Stanford University, deep learning systems trained on over 100,000 chest X-rays with radiologist annotations achieved diagnostic performance comparable to practicing radiologists. The learning emerged from systematic feedback rather than from programming specific visual features to detect.

Feedback Mechanisms in Machine Learning in AI

| Feedback Types in Machine Learning in AI | Application |

|---|---|

| Explicit User Feedback | Direct ratings, corrections, or confirmations that users provide to indicate satisfaction |

| Implicit Behavioral Signals | Actions like clicks, dwell time, or purchases that reveal preferences without explicit rating |

| Supervised Ground Truth | Expert-labeled data that provides authoritative correct answers for training purposes |

| Error Gradient Calculation | Mathematical measurement of prediction accuracy that guides parameter adjustments |

| Reinforcement Signals | Rewards or penalties that indicate how well actions achieved desired objectives |

| A/B Testing Results | Comparative performance data from testing different model versions with real users |

4. Machine Learning in AI and Learning Under Uncertainty

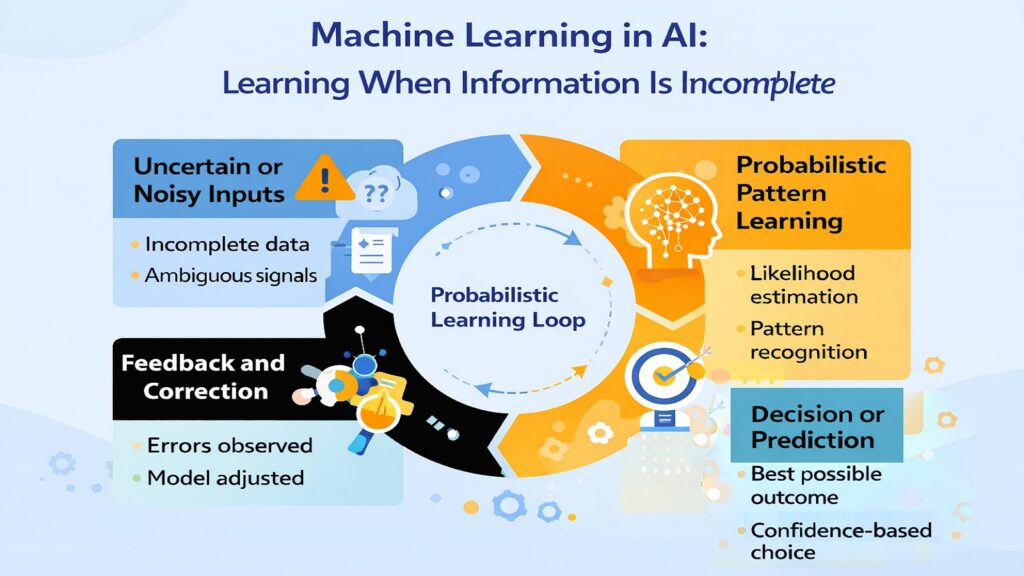

Real-world data rarely arrives clean, complete, or certain. Sensor readings contain noise. User inputs have typos and ambiguities. Historical records have gaps and inconsistencies. Traditional rule-based systems struggle with this messiness because rules typically assume precise inputs and clear conditions. Machine Learning in AI handles uncertainty naturally by learning probabilistic relationships rather than deterministic rules. Instead of declaring that specific inputs always produce specific outputs, learning systems estimate the likelihood of different outcomes given available evidence.

Credit card fraud detection operates entirely in uncertain territory. Legitimate purchases vary wildly based on individual spending patterns, travel schedules, and life circumstances. Fraudulent transactions try to mimic legitimate behavior to avoid detection. No single transaction feature definitively indicates fraud. A large purchase from a foreign country might represent vacation spending or stolen card use. Multiple small transactions could indicate normal daily activity or card testing by thieves.

Machine Learning in AI addresses this uncertainty by learning probability distributions from millions of transactions. The system develops sophisticated models of normal spending patterns for different customer segments. It recognizes that what seems unusual for one cardholder might be typical for another. When evaluating a transaction, the system estimates the probability of fraud based on dozens of factors, including transaction amount, merchant category, geographic location, time since last transaction, and deviation from historical patterns. Rather than making binary fraud or legitimate classifications, modern systems assign probability scores that fraud analysts use to prioritize investigations.

Medical diagnosis assistance faces similar uncertainty challenges. Symptoms overlap across multiple conditions. Test results have false positive and false negative rates. Patient histories contain incomplete information. Machine Learning in AI systems trained on large medical databases learn to estimate disease probabilities given symptom combinations and test results. A study by researchers at Mount Sinai Health System found that deep learning models trained on electronic health records could predict diseases like liver failure and heart problems months in advance with reasonable accuracy. The system learned which combinations of vital signs, lab values, and medical history predict future health events, even though no single indicator provides certainty.

Demand forecasting for retail and manufacturing relies on learning under uncertainty. Future sales depend on weather, economic conditions, competitor actions, marketing effectiveness, and countless other factors that cannot be known in advance. Machine Learning in AI systems analyzes historical sales data alongside contextual information to estimate demand probability distributions. According to McKinsey research, companies using machine learning for supply chain forecasting have reduced forecasting errors by 30 to 50 percent compared to traditional statistical methods. The improvement comes from learning complex patterns in noisy data rather than relying on simplified assumptions about demand stability.

Uncertainty Management in Machine Learning in AI

| Challenges in Machine Learning in AI | Solution Approach |

|---|---|

| Incomplete Data | Learning from partially observed examples and making inferences about missing information |

| Noisy Measurements | Statistical techniques that distinguish signal from random variation in sensor data |

| Ambiguous Inputs | Probabilistic reasoning that considers multiple interpretations and their relative likelihoods |

| Distribution Shift | Detecting when data patterns change over time and adapting models accordingly |

| Confidence Estimation | Quantifying prediction uncertainty so downstream systems can account for reliability |

| Rare Event Prediction | Specialized learning techniques that handle imbalanced datasets with few positive examples |

5. Machine Learning in AI at Scale Across Millions of Interactions

Scale transforms learning capabilities in ways that smaller datasets cannot achieve. A system trained on 1,000 examples learns basic patterns but struggles with variations and edge cases. A system trained on 10 million examples develops a nuanced understanding of rare situations, subtle distinctions, and complex interactions between variables. Machine Learning in AI becomes dramatically more capable as data volume increases because larger datasets contain more diverse examples and more complete coverage of the problem space.

Language translation systems demonstrate the scale’s importance clearly. Early machine translation used linguistic rules and bilingual dictionaries created by experts. Quality remained mediocre because languages have too many exceptions, idioms, and context-dependent meanings for rule-based approaches to capture. Modern neural translation systems learn from billions of translated sentence pairs. They discover translation patterns that linguists never explicitly documented. According to Google’s research, their neural machine translation system trained on massive parallel text corpora improved translation quality by 55 to 85 percent compared to previous phrase-based systems when evaluated by human raters.

The learning happens across millions of diverse contexts. The system sees how “bank” translates differently when discussing financial institutions versus river edges. It learns that some phrases translate literally while others require cultural adaptation. It discovers that sentence structure often needs significant reordering when translating between language families. These lessons emerge from observing countless translation examples rather than from programmed grammar rules. Scale provides the diversity needed to learn these subtleties.

E-commerce personalization leverages scale to create individualized experiences for millions of customers simultaneously. Amazon’s recommendation system processes billions of customer interactions, including purchases, searches, browsing patterns, wish list additions, and product reviews. The system learns taste profiles for hundreds of millions of users and relationships between millions of products. It discovers that customers who buy certain kitchen appliances often purchase specific cookbooks months later. It learns which product features matter most for different customer segments. This granular understanding only becomes possible with massive scale.

The personalization goes beyond simple “customers who bought X also bought Y” patterns. The system learns temporal sequences, seasonal variations, and cross-category relationships. It recognizes that buying camping equipment in spring predicts outdoor book purchases. It understands that customers browsing baby products might need different recommendations six months later. Research from McKinsey indicates that personalization engines drive 35 percent of Amazon’s revenue, demonstrating how learning at scale creates substantial business value.

Image recognition achieved breakthrough performance through scale. ImageNet, a dataset containing over 14 million labeled images across 20,000 categories, enabled deep learning systems to surpass human-level accuracy on object recognition tasks. The scale allowed neural networks to learn subtle visual features that distinguish similar objects. The system learned to recognize hundreds of dog breeds by observing thousands of examples of each breed in different poses, lighting conditions, and backgrounds. This visual understanding only emerged through exposure to massive, diverse training data.

Scale Effects in Machine Learning in AI

| Dimension | Impact |

|---|---|

| Data Volume Growth | More examples enable learning of rare patterns and unusual edge cases with confidence |

| Coverage Improvement | Larger datasets contain more complete representation of real-world variation and diversity |

| Model Complexity | More data supports training of models with millions of parameters without overfitting |

| Generalization Quality | Systems trained on diverse large-scale data perform better on previously unseen inputs |

| Feature Discovery | Automatic learning of relevant data attributes that human designers might not consider |

| Performance Scaling | Accuracy and capability continue improving as training data volume increases further |

6. Machine Learning in AI and Its Real-World Limits

Machine Learning in AI delivers impressive results across many domains, but important limitations constrain its capabilities. Understanding these boundaries matters for deploying AI responsibly and setting appropriate expectations. The same learning mechanisms that enable powerful pattern recognition also create blind spots and failure modes that developers and users need to recognize.

Rare event prediction challenges learning systems fundamentally. Machine learning models learn from frequency and repetition. They need many examples to identify reliable patterns. When critical events occur infrequently, training data contains few examples for the system to learn from. Predicting earthquakes, financial market crashes, or equipment failures that happen once per decade presents this problem. The system lacks sufficient experience with these rare events to develop accurate predictive models. Traditional statistical approaches sometimes outperform machine learning for rare event forecasting because they can incorporate domain expertise rather than relying solely on observational frequency.

Causal reasoning remains difficult for learning systems. Machine Learning in AI excels at identifying correlations and associations in data. It struggles to distinguish genuine causal relationships from spurious correlations. Ice cream sales and drowning deaths both increase during the summer months. A learning system might identify this correlation without understanding that warm weather causes both phenomena independently. Medical AI systems might notice that patients who receive a certain treatment have worse outcomes, not realizing that sicker patients receive that treatment precisely because their condition is more severe. The correlation exists, but the causal interpretation misleads.

Adversarial vulnerability exposes learning system fragility. Small, carefully crafted changes to inputs can cause dramatic prediction errors. Researchers have demonstrated that adding imperceptible noise to images causes vision systems to misclassify objects with high confidence. Stop signs with strategically placed stickers get recognized as speed limit signs. These adversarial examples work because machine learning models learn complex decision boundaries that humans do not share. Attackers can exploit these learned boundaries in ways that fool the system while remaining invisible to human observers.

Distribution shift degrades performance when deployed systems encounter data that differs from training data. A loan approval model trained on applicants from one geographic region might perform poorly when deployed in a different region with different economic conditions and demographics. A medical imaging system trained on one hospital’s equipment might struggle with scans from different manufacturers using different protocols. The system learned patterns specific to its training distribution that do not generalize to substantially different situations. Detecting and adapting to distribution shift remains an active research challenge.

Data efficiency limitations mean learning systems often need vastly more examples than humans. A child can learn to recognize giraffes after seeing a few examples. Computer vision systems might need thousands of giraffe images to achieve reliable recognition. Humans bring substantial prior knowledge and learning capabilities that current AI systems lack. They understand object permanence, three-dimensional space, and general properties of animals. Machine learning systems must learn these fundamentals from scratch through massive data exposure.

Algorithmic bias emerges when training data reflects historical prejudices or sampling problems. Amazon discovered that its resume screening AI discriminated against female candidates because it learned from historical hiring patterns that favored men. Facial recognition systems show higher error rates for darker-skinned individuals when trained on datasets that predominantly contain lighter-skinned faces. The system learns whatever patterns exist in training data, including patterns that humans would consider unfair or discriminatory. Addressing bias requires careful attention to data collection and evaluation across demographic groups.

Key Limitations of Machine Learning in AI

| Limitations of Machine Learning in AI | Implication |

|---|---|

| Rare Event Gaps | Insufficient training examples prevent learning reliable patterns for infrequent but important events |

| Causal Confusion | Systems identify correlations without understanding underlying cause-effect relationships |

| Adversarial Weakness | Carefully crafted inputs can trigger incorrect predictions that humans would easily avoid |

| Distribution Sensitivity | Performance degrades when deployment data differs significantly from training data patterns |

| Data Hunger | Learning systems require far more examples than humans need to achieve comparable accuracy |

| Embedded Bias | Models perpetuate prejudices and sampling problems present in historical training data |

Conclusion: Machine Learning in AI as the Foundation of Adaptive Intelligence

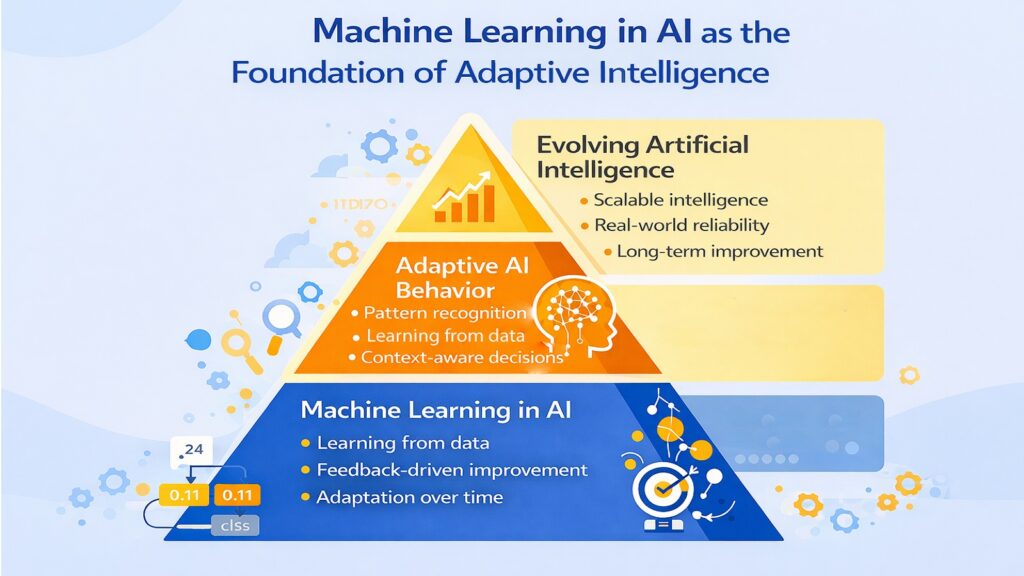

Machine Learning in AI represents more than a technical feature. It forms the foundation that enables artificial intelligence to evolve through experience rather than relying solely on human programming. The six learning mechanisms explored in this article collectively explain how modern AI systems adapt to complex environments, improve their performance over time, and handle situations their creators never explicitly anticipated.

The shift from adaptation through explicit programming to learning through data exposure changes what AI systems can accomplish. Systems that learn from experience handle nuance and variation that rule-based approaches cannot capture. Feedback loops enable continuous refinement based on real-world outcomes. Probabilistic reasoning manages the uncertainty inherent in messy real-world data. Scale allows learning systems to develop a sophisticated understanding from millions of interactions. These capabilities emerge from learning mechanisms rather than from increasingly elaborate programming.

Yet the exploration of limitations reminds us that Machine Learning in AI has meaningful boundaries. Rare events, causal reasoning, adversarial robustness, distribution shift, data efficiency, and bias all present ongoing challenges. These limitations do not diminish the technology’s value but do highlight areas requiring continued research and careful deployment practices. Understanding both capabilities and constraints enables more effective and responsible use of learning systems.

The contrast between Deep Blue and AlphaGo that opened this article illustrates the transformation. Deep Blue represented the peak of rule-based AI, powerful within its domain but fundamentally limited to what engineers programmed. AlphaGo demonstrated learning-based AI, discovering strategies through millions of self-play games that surpassed human expert knowledge. This difference extends far beyond game-playing to affect virtually every domain where AI gets deployed.

Machine Learning in AI continues advancing as researchers develop new learning algorithms, as computing power increases, and as datasets grow larger and more diverse. The fundamental principle remains constant: systems that learn from experience adapt more effectively than systems that follow fixed rules. This learning capability explains why artificial intelligence keeps expanding into new domains and why its performance continues improving over time. The learning mechanism itself, not just the applications it enables, represents the core innovation that makes modern AI different from earlier approaches.

Future Directions for Machine Learning in AI

| Future Directions for Machine Learning in AI | Potential Impact |

|---|---|

| Few-Shot Learning | Systems that learn effectively from minimal examples similar to human learning efficiency |

| Causal Inference | Moving beyond correlation to understand genuine cause-effect relationships in data |

| Continual Learning | Models that acquire new knowledge without forgetting previously learned information |

| Robust Learning | Systems resistant to adversarial attacks and distribution shift in deployment environments |

| Fairness Enhancement | Methods to detect and mitigate bias while maintaining predictive performance |

| Explainable Learning | Techniques that make model decisions interpretable and auditable for users |

Read More Tech Articles

- Quantum Computing: 6 Powerful Concepts Driving Innovation

- 8 Powerful Smart Devices To Brighten Your Life

- Canva Magic Media Review: 8 Reasons Why It is Unique

- 8 Big Ways Aged People Are Benefitting From Smartphones

- 8 Big Ways Female Entrepreneurs Are Benefiting From FinTechs

- 8 Best AI Image Generators That You Must Know About

- AI Images: 8 Simple Steps To Create Images Using AI

- Lenovo ThinkBook Plus Gen 5: Disrupting The Tech World

- Top 12 Windows Laptops of 2023: Power, Beauty and Agility