Introduction: Intelligent Robotics – Where Artificial Intelligence Learns to Act

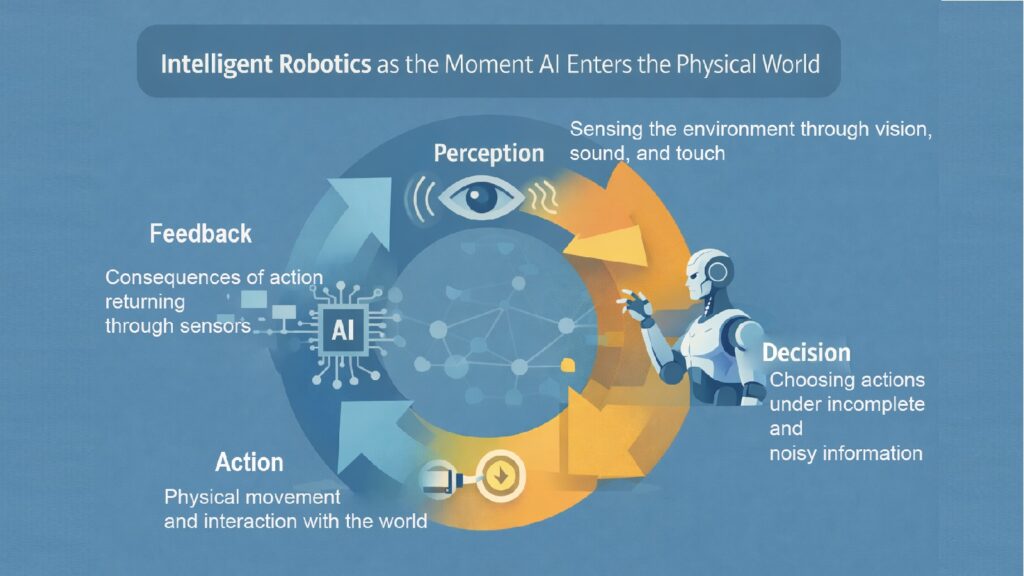

Artificial Intelligence becomes tangible when it enters physical space. That moment marks a crossing point. Abstract algorithms meet gravity, friction, and consequence. This is where Intelligent Robotics (or Cognitive Robotics) begins.

As a subfield of Artificial Intelligence, Intelligent Robotics matters because it forces intelligence to prove itself beyond simulations. A chess program can pause between moves. A language model can revise its output. But a robot navigating a hallway must decide while moving. Miss a step, and the metal hits the concrete. Intelligent Robotics strips away the safety of pure computation.

The challenge runs deeper than execution speed. In Intelligent Robotics, perception feeds uncertain data to reasoning systems that must trigger actions before complete information arrives. Sensors lie. Motors drift. The world refuses to wait. This pressure reveals what AI actually becomes when thinking, sensing, and acting converge in real time.

Intelligence in isolation remains theoretical. Intelligence in motion becomes testable. Intelligent Robotics occupies that testing ground where AI either adapts to reality or fails visibly. The stakes distinguish this subfield from others within Artificial Intelligence.

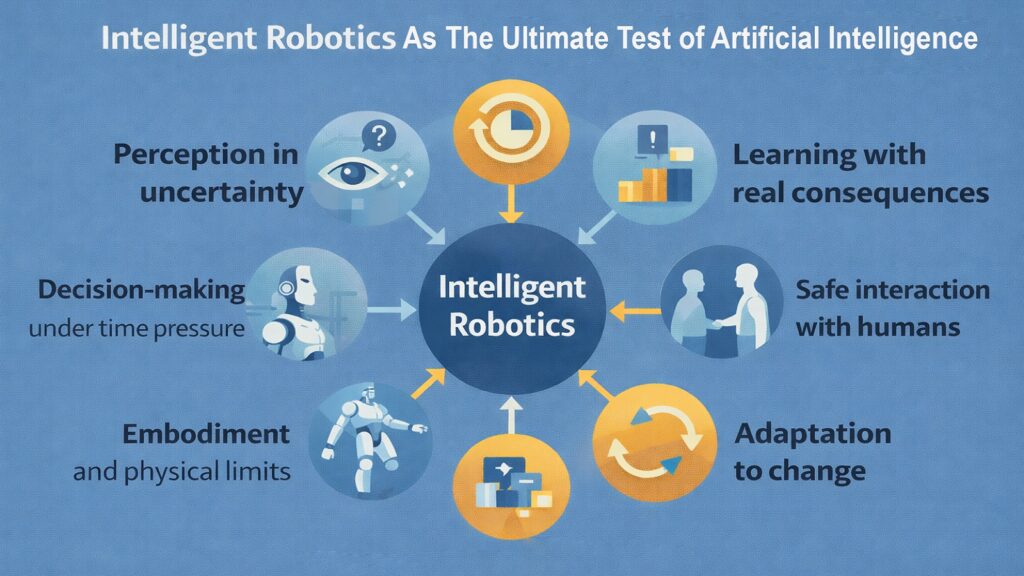

Six dimensions define how AI comes alive through Intelligent Robotics. Each dimension exposes a different constraint, a different truth about what intelligence requires when it must operate in physical space. Together, they show why Intelligent Robotics stands as a critical proving ground for the entire field of Artificial Intelligence.

Table: Intelligent Robotics Among AI Subfields – Core Characteristics

| AI Subfield | Defining Challenge |
|---|---|
| Machine Learning | Extract patterns from data to enable prediction and classification |
| Natural Language Processing | Transform human language into computational understanding and generation |
| Intelligent Robotics | Integrate perception, reasoning, and physical action in real-time environments |
| Computer Vision | Convert visual input into structured information about scenes and objects |
| Reinforcement Learning | Learn optimal behavior through trial, error, and reward signals |
| Expert Systems | Encode specialized knowledge into rule-based decision frameworks |
| Planning and Optimization | Find efficient solutions to complex problems with multiple constraints |
| Knowledge Representation | Structure information so machines can reason about relationships and facts |

1. Intelligent Robotics and Perception: How AI Learns to See and Sense Reality

Perception in Intelligent Robotics starts with imperfect signals. A camera captures light, but shadows obscure edges. A lidar beam measures distance, but reflective surfaces return false readings. Touch sensors register pressure, but vibration creates noise. The robot must build understanding from fragments.

Computer vision provides one layer of this understanding. Neural networks trained on millions of images can identify objects with impressive accuracy. But accuracy in a dataset differs from reliability in a warehouse. Lighting changes. Objects stack in unexpected ways. Dirt accumulates on lenses. Intelligent Robotics systems must reconcile what models predict with what sensors actually report.

Sensor fusion addresses this gap. Rather than trusting one source, Intelligent Robotics combines multiple inputs. Visual data merges with depth measurements and inertial readings. When sources disagree, algorithms weigh reliability. A stationary camera might trump a vibrating accelerometer. Context guides these choices. Perception becomes a continuous negotiation between competing signals.
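
One common way to weigh reliability when sources disagree is inverse-variance weighting. The sketch below is only an illustration under stated assumptions: the function name `fuse_estimates`, the two sensors, and their noise figures are all hypothetical, and real systems often use a Kalman filter or similar estimator instead.

```python
def fuse_estimates(readings):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    A lower variance means a more trusted sensor and a larger weight.
    """
    weights = [1.0 / variance for _, variance in readings]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, readings)) / total
    fused_variance = 1.0 / total
    return fused_value, fused_variance


# Hypothetical distance readings in meters: (value, variance).
steady_sensor = (2.0, 0.04)     # rigid mount, low noise
vibrating_sensor = (2.6, 0.36)  # shaking mount, high noise

distance, variance = fuse_estimates([steady_sensor, vibrating_sensor])
print(round(distance, 3), round(variance, 3))
```

The fused distance lands much closer to the steady sensor's reading, and the fused variance is smaller than either input, which is the practical payoff of combining sources.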

The incompleteness never resolves. A robot turning a corner cannot see beyond the wall. Occlusion remains constant. Intelligent Robotics embraces partial information as the permanent condition. Planning must proceed despite missing data. Decisions must account for uncertainty baked into every measurement.

This uncertainty distinguishes Intelligent Robotics from digital AI systems operating on clean databases. Text classification works with complete sentences. Image recognition receives full frames. Intelligent Robotics works with sensor streams that flicker, lag, and contradict each other. The AI must construct a working model of reality, knowing that the model will always contain errors.

Signal interpretation requires more than pattern matching. A mobile robot detecting an obstacle must judge whether that obstacle is stationary, mobile, or transient. A gust of wind might move a curtain into the camera’s view. The robot needs temporal reasoning to distinguish objects from noise, persistence from flicker. Perception in Intelligent Robotics demands understanding how the world changes, not just what it contains at a single moment.
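
A very simple form of temporal reasoning is to require that a detection persists across several frames before treating it as a real obstacle. The following is a minimal sketch, assuming a hypothetical per-frame boolean detector; the class name, window size, and hit threshold are illustrative choices, not values from any particular system.

```python
from collections import deque


class PersistenceFilter:
    """Report an obstacle only if it appeared in at least `min_hits`
    of the last `window` frames."""

    def __init__(self, window=5, min_hits=4):
        self.history = deque(maxlen=window)  # oldest frames drop off automatically
        self.min_hits = min_hits

    def update(self, detected):
        self.history.append(bool(detected))
        return sum(self.history) >= self.min_hits


# Hypothetical per-frame detections: a flapping curtain versus a parked cart.
curtain_frames = [True, False, False, True, False, False]
cart_frames = [True, True, True, True, True, True]

curtain_filter = PersistenceFilter()
curtain_out = [curtain_filter.update(d) for d in curtain_frames]

cart_filter = PersistenceFilter()
cart_out = [cart_filter.update(d) for d in cart_frames]
```

The flickering curtain never crosses the threshold, while the persistent cart is confirmed from the fourth frame onward. The cost is latency: the robot waits a few frames before it believes what it sees.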

Table: Intelligent Robotics Perception Challenges – Key Factors

| Perception Factor | Impact on Intelligent Robotics |
|---|---|
| Sensor Noise | Forces probabilistic reasoning instead of deterministic conclusions |
| Environmental Variability | Requires adaptation to changing lighting, weather, and spatial conditions |
| Occlusion and Blind Spots | Demands prediction and memory to fill gaps in immediate sensing |
| Multi-Modal Integration | Needs fusion algorithms to reconcile conflicting data from different sensors |
| Real-Time Processing | Limits computational complexity of perception algorithms under time pressure |
| Calibration Drift | Requires continuous recalibration as hardware performance degrades over time |

2. Intelligent Robotics and Decision-Making: How AI Chooses Actions in Motion

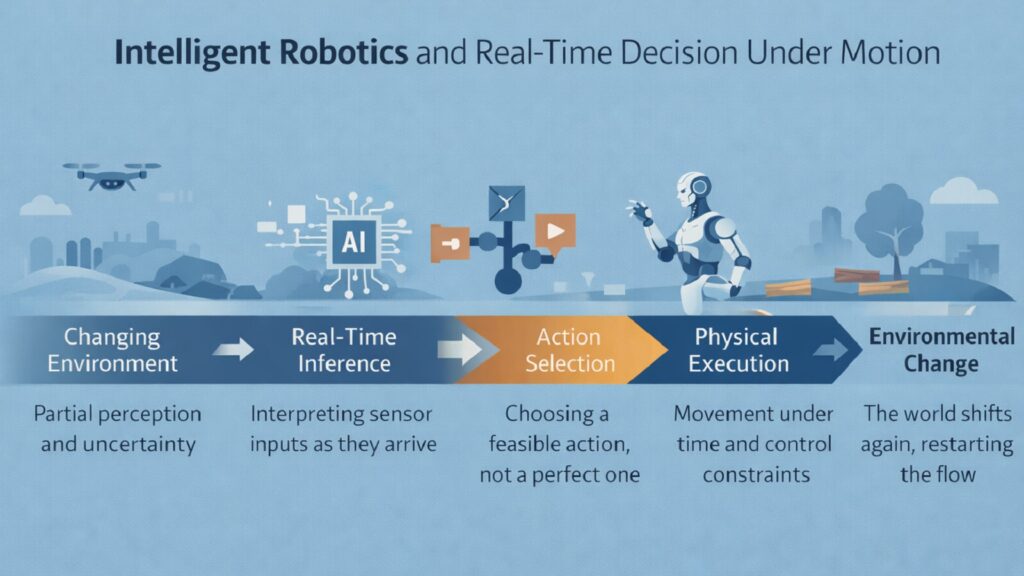

Decision-making in Intelligent Robotics happens while the world changes. A robotic arm reaching for a moving object cannot pause to reconsider. The target shifts. Timing matters. The decision window closes whether the AI finishes thinking or not.

This pressure transforms how intelligence operates. Traditional planning in Artificial Intelligence assumes a static problem space. Calculate costs, evaluate options, select the best path. Intelligent Robotics collapses that sequence. Evaluation and execution overlap. The robot must commit to actions before analysis completes.

Control theory provides tools for this challenge. Feedback loops let robots adjust while moving. A drone maintaining altitude measures its position hundreds of times per second and corrects for drift. The intelligence lies not in finding a perfect solution but in maintaining stability through continuous adjustment. Intelligent Robotics replaces optimization with regulation.
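
The measure-and-correct loop can be sketched with a proportional-derivative (PD) controller on a toy model. Everything here is an assumption made for illustration: the unit-mass point model, the gains, the 200 Hz rate, and the premise that gravity is already cancelled by a separate feedforward term.

```python
def simulate_altitude_hold(target_m, kp=4.0, kd=4.0, rate_hz=200, duration_s=5.0):
    """Simulate a PD altitude controller on a unit-mass point model.

    Assumes gravity is cancelled elsewhere, so the command `u` is the
    net vertical acceleration.
    """
    dt = 1.0 / rate_hz
    altitude, velocity = 0.0, 0.0
    for _ in range(int(duration_s * rate_hz)):
        error = target_m - altitude      # measure the deviation
        u = kp * error - kd * velocity   # correct, with damping on velocity
        velocity += u * dt               # integrate acceleration
        altitude += velocity * dt        # integrate velocity
    return altitude


final_altitude = simulate_altitude_hold(target_m=10.0)
print(round(final_altitude, 2))
```

No step in the loop solves for a best trajectory. Each iteration only nudges the system toward the target, and stability comes from repeating that nudge many times per second.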

Real-time inference pushes AI models toward speed over precision. A deep learning model that takes five seconds to classify an image fails when a robot needs to react in milliseconds. Intelligent Robotics demands either faster models or different architectures. Some systems use cascaded decision-making where quick heuristics handle immediate threats while slower reasoning addresses strategic choices.
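
The cascaded idea can be illustrated with a two-layer decision function: a cheap reflex check that runs every cycle, and a slower planner whose answer may not be ready yet. The function name, action labels, and stop distance below are hypothetical.

```python
def decide(distance_to_obstacle_m, planner_suggestion, stop_distance_m=0.5):
    """Fast reflex layer first; defer to the slower planner otherwise."""
    if distance_to_obstacle_m < stop_distance_m:
        return "emergency_stop"   # cheap check that handles immediate threats
    if planner_suggestion is None:
        return "hold_course"      # slow layer has not produced an answer yet
    return planner_suggestion     # strategic choice from the slow layer


print(decide(0.2, "turn_left"))   # reflex overrides the planner
print(decide(2.0, None))          # planner still thinking
print(decide(2.0, "turn_left"))   # planner result is used
```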

The concept of satisficing becomes essential. Herbert Simon introduced this term to describe decision-making that seeks satisfactory rather than optimal outcomes. Intelligent Robotics embodies this principle. A mobile robot choosing between two paths doesn’t need the mathematically shortest route. It needs a safe route computed quickly enough to matter. Good enough, delivered in time, beats perfect delivered too late.
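
Satisficing under a deadline can be sketched as "return the first acceptable option, or give up when time runs out." The path records, the clearance threshold, and the function name `choose_path` are assumptions for this example only.

```python
import time


def choose_path(candidates, is_safe, budget_s):
    """Return the first candidate that passes the safety check, or None
    if the time budget runs out. It does not search for the shortest path."""
    deadline = time.monotonic() + budget_s
    for path in candidates:
        if time.monotonic() > deadline:
            return None
        if is_safe(path):
            return path
    return None


# Hypothetical candidate paths.
candidates = [
    {"name": "A", "clearance_m": 0.2, "length_m": 8},
    {"name": "B", "clearance_m": 0.9, "length_m": 14},
    {"name": "C", "clearance_m": 1.5, "length_m": 11},
]

chosen = choose_path(candidates, lambda p: p["clearance_m"] >= 0.5, budget_s=0.05)
print(chosen["name"])
```

Path C is both safe and shorter than B, but the function stops at B because B is already good enough. That is the trade the paragraph describes.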

Adaptability replaces pure planning. Pre-computed paths fail when unexpected obstacles appear. Intelligent Robotics systems must replan on the fly. Dynamic programming and local optimization become more valuable than exhaustive search. The AI learns to make the best decision available given current information and current constraints, knowing both will change momentarily.

Responsiveness distinguishes Intelligent Robotics from other AI applications. A recommendation algorithm can take minutes to update suggestions. A robot avoiding a collision has milliseconds. This temporal constraint shapes everything about how intelligence manifests in physical systems. The subfield reveals intelligence as a time-bounded phenomenon, not a timeless computation.

Table: Intelligent Robotics Decision Challenges – Core Dynamics

| Decision Aspect | Constraint in Intelligent Robotics |
|---|---|
| Planning Horizon | Must balance long-term goals against immediate action requirements |
| Computational Budget | Limited processing time forces approximation over exhaustive analysis |
| Information Completeness | Decisions proceed despite missing or uncertain state information |
| Action Reversibility | Many physical actions cannot be undone, raising decision stakes |
| Environmental Dynamics | Plans become obsolete as external conditions change during execution |
| Multi-Objective Tradeoffs | Must simultaneously optimize for speed, safety, energy, and task completion |

3. Intelligent Robotics and Learning: How AI Improves Through Physical Experience

Learning in Intelligent Robotics carries weight that digital learning avoids. When a language model generates a poor sentence, nothing breaks. When a robot executes a poor grasp, objects fall. Motors strain. Parts wear. Learning through physical experience means learning through consequence.

Reinforcement learning fits naturally here. Agents explore actions, receive rewards, and adjust policies. In simulation, millions of iterations run quickly. In Intelligent Robotics, each iteration consumes time, energy, and equipment lifespan. Researchers at UC Berkeley found that training a robot to manipulate objects could require thousands of attempts. Each attempt risks damage. Learning becomes expensive.
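
The explore, reward, adjust cycle can be made concrete with a single tabular Q-learning update. This is a textbook-style sketch, not the method of any study mentioned here; the state names, action names, rewards, and learning parameters are all hypothetical.

```python
def q_update(q, state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """Apply one tabular Q-learning update in place and return the new value."""
    # States with no recorded actions (e.g. terminal states) contribute 0.
    best_next = max(q[next_state].values()) if q.get(next_state) else 0.0
    td_target = reward + gamma * best_next
    q[state][action] += alpha * (td_target - q[state][action])
    return q[state][action]


# Hypothetical grasping task with two actions in one state.
q = {
    "object_in_view": {"soft_grip": 0.0, "firm_grip": 0.0},
    "object_held": {},
}

# A firm grip succeeded; a soft grip let the object slip.
q_update(q, "object_in_view", "firm_grip", reward=1.0, next_state="object_held")
q_update(q, "object_in_view", "soft_grip", reward=-1.0, next_state="object_in_view")
print(q["object_in_view"])
```

Each call corresponds to one physical attempt on a real robot, which is why the number of updates a policy needs translates directly into time and wear.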

Imitation learning offers an alternative. Rather than discovering behaviors through trial and error, robots learn from demonstration. A human shows the task. The robot observes and attempts to reproduce it. This approach reduces the exploration burden but introduces new challenges. The robot must generalize from limited examples to varied situations. It must understand which aspects of the demonstration matter and which were incidental to that specific instance.

Feedback loops in Intelligent Robotics create tighter coupling between action and outcome than digital AI experiences. A robot learns not just from labeled data but from physics. Apply too much force, and a grip crushes the object. Apply too little and the object slips. The physical world provides immediate, honest feedback. This feedback shapes learning in ways that purely supervised learning cannot replicate.

The risk of learning under consequence makes simulation essential yet insufficient. Researchers train robots in virtual environments to avoid real-world failures. But simulation diverges from reality. Friction behaves differently. Simulators simplify contact dynamics. Cognitive Robotics must bridge this reality gap. Techniques like domain randomization introduce variation during simulated training to build robustness. Still, the final learning must happen in physical space, where consequences teach lessons that simulation cannot.
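
Domain randomization can be sketched as drawing fresh physics parameters for every simulated episode, so the policy never trains against one fixed version of the world. The parameter names and ranges below are illustrative assumptions and are not tied to any specific simulator.

```python
import random


def sample_sim_params(rng):
    """Draw one randomized set of physics parameters for a training episode."""
    return {
        "friction": rng.uniform(0.4, 1.2),
        "object_mass_kg": rng.uniform(0.1, 0.5),
        "sensor_noise_std": rng.uniform(0.0, 0.02),
    }


rng = random.Random(0)  # seeded so the run is repeatable
episodes = [sample_sim_params(rng) for _ in range(3)]
for params in episodes:
    print(params)
```

A policy that succeeds across the whole range is more likely to tolerate the one set of values the real hardware turns out to have.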

Transfer learning becomes critical. A robot trained on one task must apply that knowledge to related tasks. Learning to grasp cylinders should help with grasping boxes. Intelligent Robotics pushes AI to extract principles rather than memorize solutions. The subfield demands generalization because physical training time is too precious to waste on learning each task from scratch.

This learning environment reveals AI’s limitations clearly. Mistakes manifest as broken tools, failed tasks, and damaged equipment. Progress becomes measurable in tangible outcomes rather than abstract metrics. Intelligent Robotics shows whether AI can actually improve through experience when that experience comes with real costs.

Table: Intelligent Robotics Learning Factors – Critical Elements

| Learning Factor | Challenge in Intelligent Robotics |
|---|---|
| Sample Efficiency | Physical trials are slow and costly compared to digital data processing |
| Safety During Exploration | Learning requires trying new actions that might cause harm or damage |
| Sim-to-Real Transfer | Behaviors learned in simulation often fail when deployed on actual hardware |
| Task Generalization | Must extract reusable skills from specific training experiences |
| Human Demonstration Quality | Imitation learning depends on consistent, reproducible human teaching |
| Long-Term Memory | Must retain and apply lessons across extended operational periods |

4. Intelligent Robotics and Embodiment: How AI Gains a Physical Presence

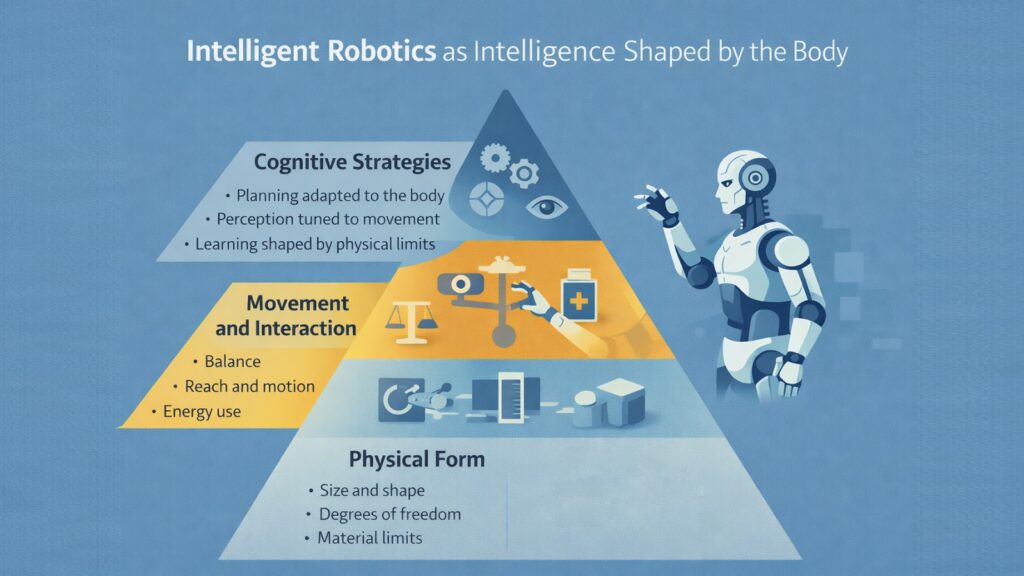

Embodiment means intelligence exists within form. The robot’s shape determines what it can sense, where it can reach, and how it can move. These physical boundaries become cognitive boundaries. Intelligent Robotics cannot separate the mind from the body.

A wheeled robot thinks differently from a legged robot. Wheels excel on flat surfaces but fail on stairs. Legs handle uneven terrain but move slowly on pavement. The hardware shapes strategy. Intelligence in Intelligent Robotics must work within the constraints that mechanics impose. Problem-solving becomes tied to what the body can actually do.

Movement constraints affect planning directly. A robotic arm with six joints can reach more positions than an arm with three joints. But more joints mean more complex control. More degrees of freedom multiply the search space for motion planning. Intelligent Robotics balances capability against computational cost. The body’s complexity determines how hard the brain must work.
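
A quick calculation shows how fast the search space grows. Assuming, purely for illustration, that a planner discretizes each joint into 10 candidate angles, the number of joint configurations is the per-joint count raised to the number of joints.

```python
def grid_configurations(joints, samples_per_joint):
    """Count joint configurations on a uniform grid over every joint."""
    return samples_per_joint ** joints


three_joint = grid_configurations(joints=3, samples_per_joint=10)
six_joint = grid_configurations(joints=6, samples_per_joint=10)
print(three_joint, six_joint, six_joint // three_joint)
```

Doubling the joints from three to six multiplies the grid by a factor of 1,000, which is one reason practical planners tend to sample the space rather than enumerate it.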

Energy limits further constrain intelligence. A robot operating on battery power must consider energy consumption in every decision. Taking the scenic route drains power. Aggressive acceleration wastes charge. Intelligent Robotics systems incorporate energy awareness into their reasoning. The AI must think about its own survival, not just task completion.

Physical interaction demands different kinds of understanding than symbolic reasoning. Grasping an object requires predicting how fingers and the surface will interact. Pushing a door open requires estimating resistance. Walking on ice demands adjusting the gait. Cognitive Robotics forces AI to develop intuitive physics, a model of how materials, forces, and motion relate in the tangible world.

The body also provides opportunities. Sensors mount on physical structures, creating specific vantage points. Actuators enable direct manipulation. The robot can change its environment rather than just observing it. This agency distinguishes Intelligent Robotics from passive AI systems. Embodiment grants the power to act, which grants the responsibility to act wisely.

Morphology and cognition intertwine. Researchers studying insect intelligence have found that body structure offloads computational burden. A cockroach’s distributed nervous system and compliant legs let it run over obstacles without complex planning. The body absorbs environmental complexity. Intelligent Robotics increasingly borrows this insight, designing hardware that simplifies software requirements. Smart design reduces the cognitive load.

Table: Intelligent Robotics Embodiment Elements – Physical Constraints

| Embodiment Aspect | Influence on Intelligent Robotics |
|---|---|
| Morphological Design | Body structure determines accessible spaces and achievable movements |
| Actuator Capabilities | Motor power and precision limit force application and motion speed |
| Sensor Placement | Physical mounting positions create specific observational perspectives and blind spots |
| Weight Distribution | Mass affects stability, energy efficiency, and dynamic maneuverability |
| Material Properties | Compliance and rigidity influence interaction forces and contact dynamics |
| Form Factor | Overall size and shape constrain operational environments and social perception |

5. Intelligent Robotics and Adaptation: How AI Handles Uncertainty and Change

Adaptation separates functional Intelligent Robotics from fragile systems. The real world shifts constantly. Objects move. Surfaces wear. Lighting dims. A robot that works perfectly in controlled conditions but fails when conditions vary lacks the adaptability that defines robust intelligence.

Unpredictable environments test AI’s flexibility. A delivery robot navigating city sidewalks encounters pedestrians, pets, scooters, puddles, and construction barriers. No training dataset captures every variation. Intelligent Robotics must generalize beyond its preparation. The system needs resilience built into its architecture, not just breadth in its training data.

Partial failures create especially difficult scenarios. A robot might lose one sensor but retain others. A motor might degrade in performance without failing completely. Intelligent Robotics systems must detect these degradations and compensate. Fault tolerance requires self-awareness. The AI must monitor its own condition and adjust behavior when capabilities diminish.
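
One simple way to detect a motor that is degrading without failing outright is to track how much of the commanded speed it actually delivers. The sketch below assumes a hypothetical monitor class, window length, and 80% threshold; a real system would tune these against its own hardware.

```python
from collections import deque


class ActuatorMonitor:
    """Flag degradation when the average measured/commanded ratio over a
    full window drops below `min_ratio`."""

    def __init__(self, window=10, min_ratio=0.8):
        self.ratios = deque(maxlen=window)
        self.min_ratio = min_ratio

    def update(self, commanded, measured):
        if commanded > 0:  # skip idle samples to avoid dividing by zero
            self.ratios.append(measured / commanded)
        return self.is_degraded()

    def is_degraded(self):
        if len(self.ratios) < self.ratios.maxlen:
            return False  # not enough evidence yet
        return sum(self.ratios) / len(self.ratios) < self.min_ratio


healthy = ActuatorMonitor()
healthy_flags = [healthy.update(1.0, 0.95) for _ in range(10)]

worn = ActuatorMonitor()
worn_flags = [worn.update(1.0, 0.70) for _ in range(10)]
```

The worn motor is flagged only once the window fills, so a single bad sample does not trigger compensation.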

Recovery mechanisms become essential. When a robot drops an object, it must recognize the failure and attempt retrieval. When navigation fails, it must backtrack or request help. Intelligent Robotics incorporates error handling as a core competency. The system assumes mistakes will happen and prepares responses in advance.
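
Preparing responses in advance can be as plain as an ordered list of fallbacks with a final request for help. In this sketch the strategy names and the stand-in lambdas are hypothetical; on a real robot each action would be a routine that reports whether it succeeded.

```python
def run_with_fallbacks(strategies):
    """Try each (name, action) pair in order. Return the name of the first
    action that reports success, or "request_help" if none do."""
    for name, action in strategies:
        try:
            if action():
                return name
        except Exception:
            continue  # treat a crashed strategy as a failed one
    return "request_help"


def crashing_action():
    raise RuntimeError("gripper fault")


# Hypothetical recovery plan after a dropped object.
outcome = run_with_fallbacks([
    ("retry_grasp", lambda: False),
    ("reposition_and_grasp", crashing_action),
    ("backtrack_and_retry", lambda: True),
])
print(outcome)
```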

Novel situations demand creative adaptation. Researchers at MIT demonstrated robots that could reconfigure their approach when standard methods failed. A robot unable to grasp a slippery object tried pushing it against a wall to pin it. This kind of problem-solving requires the AI to reason about alternative strategies, not just execute learned behaviors. Intelligent Robotics pushes toward genuine flexibility.

Robustness emerges from redundancy and diversity. Multiple sensors provide backup when one fails. Multiple strategies offer alternatives when one proves inadequate. Intelligent Robotics systems build in margins of safety, accepting that perfect efficiency creates brittle performance. The system trades peak performance for consistent reliability.

Context sensitivity helps adaptation. A robot operating in a factory learns factory-specific patterns. A robot entering a home must learn household-specific patterns. Intelligent Robotics increasingly incorporates online learning that continues throughout operation. The AI adjusts to local conditions rather than relying solely on universal training. This local tuning represents adaptation at its most practical.

Table: Intelligent Robotics Adaptation Requirements – Survival Factors

| Adaptation Requirement | Implementation in Intelligent Robotics |
|---|---|
| Environmental Sensing | Continuous monitoring detects changes in operating conditions |
| Degradation Detection | Self-diagnostic systems identify performance decline in sensors and actuators |
| Strategy Switching | Multiple behavioral modes allow switching when primary approach fails |
| Graceful Degradation | Reduced functionality maintained when full capability becomes unavailable |
| Anomaly Response | Exception handling triggers safe behaviors during unexpected situations |
| Online Calibration | Real-time parameter adjustment compensates for drifting hardware performance |

6. Intelligent Robotics and Responsibility: How AI Acts Safely in the Real World

Responsibility in Intelligent Robotics means accounting for the robot's presence among people. A social robot in a hospital must never harm a patient. An industrial robot must protect workers. An autonomous vehicle must prevent accidents. These requirements transcend task performance. Safety becomes the primary objective.

Constraint-based planning embeds safety into decision-making. Rather than finding the fastest path, the robot finds the fastest safe path. Constraints define boundaries that the system cannot cross. A mobile robot might maintain a minimum distance from humans. A surgical robot might limit force application. Intelligent Robotics treats these constraints as inviolable, not merely preferred.
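
The difference between a hard constraint and a preference shows up clearly in code: unsafe options are removed before any optimization happens. The path records, field names, and 1 m distance limit below are assumptions for illustration.

```python
def fastest_safe_path(paths, min_human_distance_m=1.0):
    """Discard paths that violate the distance constraint, then pick the
    fastest of what remains. Return None if nothing is safe."""
    safe = [p for p in paths if p["closest_human_m"] >= min_human_distance_m]
    if not safe:
        return None
    return min(safe, key=lambda p: p["travel_time_s"])


# Hypothetical candidate paths through a shared workspace.
paths = [
    {"name": "direct", "travel_time_s": 12, "closest_human_m": 0.4},
    {"name": "left_aisle", "travel_time_s": 18, "closest_human_m": 1.6},
    {"name": "perimeter", "travel_time_s": 30, "closest_human_m": 3.0},
]

best = fastest_safe_path(paths)
print(best["name"])
```

The direct route is fastest but never competes, because it fails the constraint. When no path is safe, the function returns nothing rather than the least unsafe option.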

Human-in-the-loop systems acknowledge that AI alone cannot guarantee safety. Critical decisions are passed to human operators. A warehouse robot encountering an ambiguous situation stops and requests guidance. This design accepts AI’s limitations and builds collaboration into the architecture. Intelligent Robotics recognizes when to defer rather than proceed with uncertainty.
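
Deferral can be sketched as a confidence gate in front of execution. The threshold value, the labels, and the idea that the system exposes a single confidence score are all simplifying assumptions here.

```python
def next_step(proposed_action, confidence, threshold=0.9):
    """Execute only when confidence clears the threshold; otherwise stop
    and hand the decision to a human operator."""
    if confidence >= threshold:
        return ("execute", proposed_action)
    return ("stop_and_ask_operator", proposed_action)


print(next_step("pick_item", confidence=0.97))
print(next_step("pick_item", confidence=0.55))
```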

Fail-safe behavior provides backstop protection. If sensors fail, the robot enters a safe state. If communication drops, default behaviors activate. Intelligent Robotics systems plan for degraded modes where reduced capability replaces full autonomy. The system must fail predictably and safely.
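
A watchdog timer is one familiar pattern for failing predictably when communication drops. This is a minimal sketch with a hypothetical class and mode names; timestamps are passed in explicitly so the behavior is easy to follow and test.

```python
class Watchdog:
    """Switch to a safe mode when no heartbeat arrives within `timeout_s`."""

    def __init__(self, timeout_s):
        self.timeout_s = timeout_s
        self.last_heartbeat = None

    def heartbeat(self, now_s):
        self.last_heartbeat = now_s

    def mode(self, now_s):
        # No heartbeat ever received counts as a failure, not as "fine so far".
        if self.last_heartbeat is None:
            return "safe_stop"
        if now_s - self.last_heartbeat > self.timeout_s:
            return "safe_stop"
        return "normal"


watchdog = Watchdog(timeout_s=0.5)
startup_mode = watchdog.mode(now_s=0.0)   # nothing heard yet
watchdog.heartbeat(now_s=1.0)
linked_mode = watchdog.mode(now_s=1.2)    # heartbeat is recent
dropped_mode = watchdog.mode(now_s=2.0)   # link has gone quiet
```

Defaulting to the safe state before the first heartbeat reflects the design stance in the paragraph above: the absence of information is handled as a fault.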

Transparency supports trust and accountability. Explainable AI techniques let robots communicate their reasoning. A care robot might explain why it chose a particular path or action. This transparency helps human supervisors understand robot behavior and intervene when necessary. In safety-critical settings, Intelligent Robotics often benefits more from interpretability than from raw performance.

Ethical boundaries must be explicit. Asimov’s fictional laws of robotics captured this need decades ago. Modern Cognitive Robotics translates ethical principles into computational constraints. A robot cannot choose an action that violates safety regulations even if that action optimizes other objectives. Ethics become hard-coded limits, not suggestions.

Testing and verification become paramount. Before deployment, Cognitive Robotics systems undergo extensive validation. Edge cases get explored. Failure modes get analyzed. The subfield borrows from aerospace and medical device engineering, where reliability matters more than novelty. Responsible Intelligent Robotics prioritizes proven safety over cutting-edge capability.

This focus on responsibility makes Intelligent Robotics visible and accountable in ways purely digital AI avoids. A flawed recommendation algorithm frustrates users. A flawed robot harms people. This difference elevates standards across the subfield and pushes Artificial Intelligence toward more rigorous safety practices.

Table: Intelligent Robotics Safety Mechanisms – Protection Layers

| Safety Mechanism | Function in Intelligent Robotics |
|---|---|
| Physical Constraints | Hardware design limits speed, force, and range to safe operational bounds |
| Proximity Sensing | Detects nearby humans and triggers protective behaviors or emergency stops |
| Redundant Systems | Backup sensors and actuators maintain function if primary components fail |
| Authority Hierarchy | Human operators can override robot decisions and issue direct commands |
| Behavior Verification | Pre-deployment testing validates performance under diverse failure scenarios |
| Compliance Monitoring | Runtime checks ensure actions respect regulatory and ethical boundaries |

Conclusion: Intelligent Robotics – Why This Subfield Defines the Future of AI

Intelligent Robotics answers a question that Artificial Intelligence must face. Does intelligence work when reality imposes constraints? The subfield strips away the comfort of controlled environments and demands that AI prove itself in motion, under pressure, with consequences.

Other branches of Artificial Intelligence operate in domains where time stretches and mistakes cost little. Intelligent Robotics operates where physics applies immediately and errors create damage. This distinction transforms what intelligence means. Abstract reasoning becomes embodied cognition. Optimal solutions become satisfactory actions delivered quickly. Perfect training gives way to continuous adaptation.

The six dimensions explored here converge into one insight. Intelligent Robotics reveals intelligence as a physical phenomenon. Perception grounds thinking in sensor streams. Decision-making operates under time pressure. Learning happens through consequence. Embodiment constrains cognition. Adaptation enables survival. Responsibility governs action. Together, these dimensions show that intelligence in the real world differs fundamentally from intelligence in simulation.

This subfield matters not because robots replace human workers or because automation increases efficiency. Intelligent Robotics matters because it tests whether AI actually understands the world it claims to model. Can perception extract meaning from noisy signals? Can planning accommodate incomplete information? Can learning transfer across contexts? Can systems adapt when conditions change? These questions define whether Artificial Intelligence remains a laboratory curiosity or becomes a reliable technology.

The pushback from physical reality clarifies thinking. When ideas meet materials, forces, and time, weaknesses become obvious. Intelligent Robotics exposes those weaknesses and forces improvement.

Looking forward, Intelligent Robotics will increasingly define how Artificial Intelligence develops. As AI systems move beyond screens and servers into homes, streets, and factories, the lessons of Intelligent Robotics become universal. Every AI operating in physical space must perceive accurately, decide quickly, learn safely, adapt continuously, and act responsibly. These capabilities originated in Intelligent Robotics but now represent requirements for the entire field.

The future of Artificial Intelligence is not purely digital. Intelligence will inhabit bodies, navigate spaces, manipulate objects, and interact with people. Intelligent Robotics shows what the future requires. The subfield reveals that intelligence, when tested by reality, becomes something richer and more demanding than algorithms alone can capture. That revelation positions Intelligent Robotics not as a peripheral application but as a central challenge that shapes the entire trajectory of Artificial Intelligence.

Table: Intelligent Robotics Impact on AI Evolution – Future Directions

| Impact Area | How Intelligent Robotics Shapes AI Development |
|---|---|
| Embodied Cognition Research | Physical interaction reveals cognitive principles invisible in purely digital systems |
| Real-Time AI Architectures | Time pressure drives development of faster, more efficient inference methods |
| Safety-Critical AI Standards | Physical consequences establish rigorous testing and validation protocols |
| Adaptive Learning Methods | Dynamic environments push development of continual and online learning techniques |
| Human-AI Collaboration Models | Physical presence demands natural interfaces and transparent decision-making |
| Resource-Constrained Intelligence | Energy and compute limits inspire efficient algorithms scalable to edge devices |
